Chat applications have been around for decades, and they are mainstream communication channels for both personal and business use. The best-known example may be WhatsApp by Meta. WhatsApp currently has 2 billion monthly active users and over 500 million daily active users worldwide.

Overall Design

Requirements

To gather the requirements and the scale of our application, we can ask the following questions:

- What should be the scale of the application?

Let’s go with WhatsApp’s current figure of 500 million daily active users. Also, let’s assume each user sends 30 messages per day on average.

- Should we support group conversations?

Yes, there will be one-to-one and group conversations.

- Should we support media, such as images and videos?

Yes, users should be able to send media as well.

- Should we store chats permanently?

For now, let’s not store delivered messages.

- Should we support message delivery status?

Yes, users should be able to see the sent, delivered, and read status of messages.

- Should we support user activity?

Yes, users should be able to see when other users were last seen.

So, after gathering the requirements, we can list them briefly as:

Functional Requirements

The application must support:

- both one-to-one and group chats.

- message status updates.

- last user activity.

- media messages such as images and videos.

- not storing chats permanently.

Non-functional requirements

According to the CAP theorem:

- There will be a large volume of data, i.e., billions of messages per day, so the data has to be distributed and we will need partition tolerance.

- Between consistency and availability, we must favor consistency, because messages should be delivered in real time and in order. Otherwise, messages may go missing or arrive out of order, causing miscommunication, which is the worst-case scenario.

- There should be no data loss, i.e., the system must be reliable so that no messages are lost.

- The system should be highly available and resilient.

System Estimations

Traffic

On average, there will be 500M active users per day and 30 messages per user. So,

500M users * 30 messages = 15B messages per day ≈ 174k messages per second (15B / 86,400 seconds).

However, traffic is not constant throughout the day. Let’s assume our peak traffic is around 10M messages per second.
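The traffic estimate can be checked with a few lines of arithmetic. This is only a sketch of the calculation described in the text; the variable names are illustrative, and the 10M peak is the text's assumption, not a measured value.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

daily_active_users = 500_000_000
messages_per_user_per_day = 30

messages_per_day = daily_active_users * messages_per_user_per_day
average_mps = messages_per_day / SECONDS_PER_DAY

# Assumed peak from the text: 10M messages per second.
peak_mps = 10_000_000
peak_to_average = peak_mps / average_mps

print(f"{messages_per_day:,} messages per day")
print(f"{average_mps:,.0f} messages per second on average")
print(f"assumed peak is about {peak_to_average:.0f}x the average")
```

The assumed peak is far above the average rate, so sizing for the peak leaves a large safety margin.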

Storage

Since we only have to store undelivered messages, let’s assume only 10% of messages are not delivered and we store those messages for 30 days.

Also, assume that each message requires 10 KB of data on average. Since most messages will be text, this is a fair assumption. Then,

15B messages * 10 KB per message = 150 TB of message data per day

10% of them are undelivered, so 150 TB / 10 = 15 TB of new storage per day

15 TB * 30 days = 450 TB of storage is required.

However, messages are not the only data to be stored. We will also need to store user data, such as which server each user is connected to and their last activity timestamp. Assume each entry takes around 1 KB and that half of the monthly users, i.e., 1 billion, are active at the same time. Then we will need

1 KB * 1 billion = 1 TB

storage for keeping user status. However, this may be kept in a distributed cache on chat servers.
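The storage arithmetic can be verified the same way. The sketch below assumes decimal units (1 KB = 1,000 bytes, 1 TB = 10^12 bytes) and uses the text's assumptions of 10 KB per message, 10% undelivered, and 30 days of retention; all names are illustrative.

```python
KB = 1_000
TB = 1_000 ** 4

messages_per_day = 15_000_000_000
message_size_bytes = 10 * KB
undelivered_percent = 10   # assumption from the text
retention_days = 30        # assumption from the text

daily_message_bytes = messages_per_day * message_size_bytes
daily_undelivered_bytes = daily_message_bytes * undelivered_percent // 100
retained_bytes = daily_undelivered_bytes * retention_days

# User status entries: 1 KB each for 1 billion concurrently active users.
user_entry_bytes = 1 * KB
concurrent_users = 1_000_000_000
user_status_bytes = user_entry_bytes * concurrent_users

print(f"all messages per day:   {daily_message_bytes / TB:,.0f} TB")
print(f"undelivered per day:    {daily_undelivered_bytes / TB:,.0f} TB")
print(f"retained over 30 days:  {retained_bytes / TB:,.0f} TB")
print(f"user status entries:    {user_status_bytes / TB:,.0f} TB")
```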

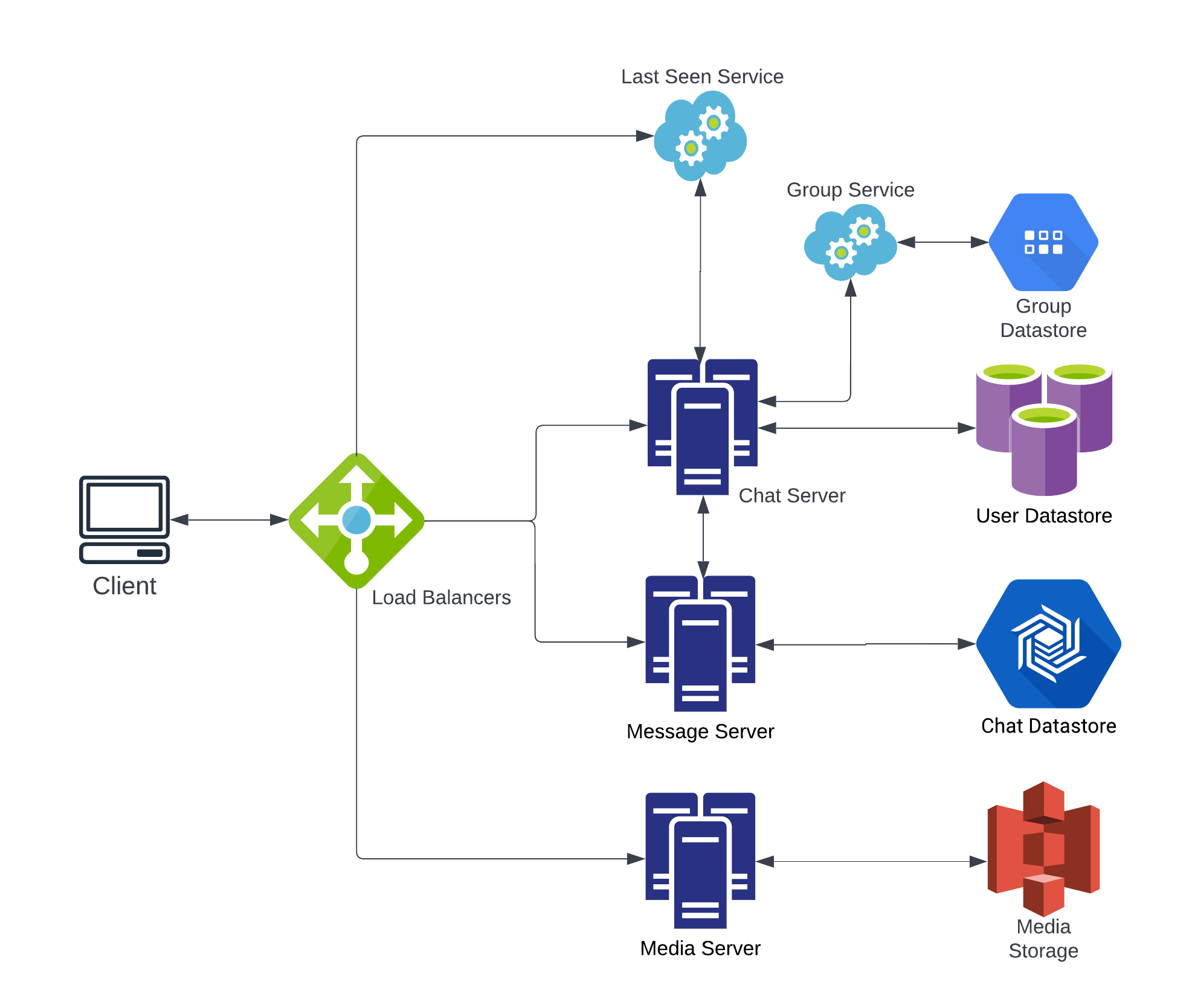

High Level Design

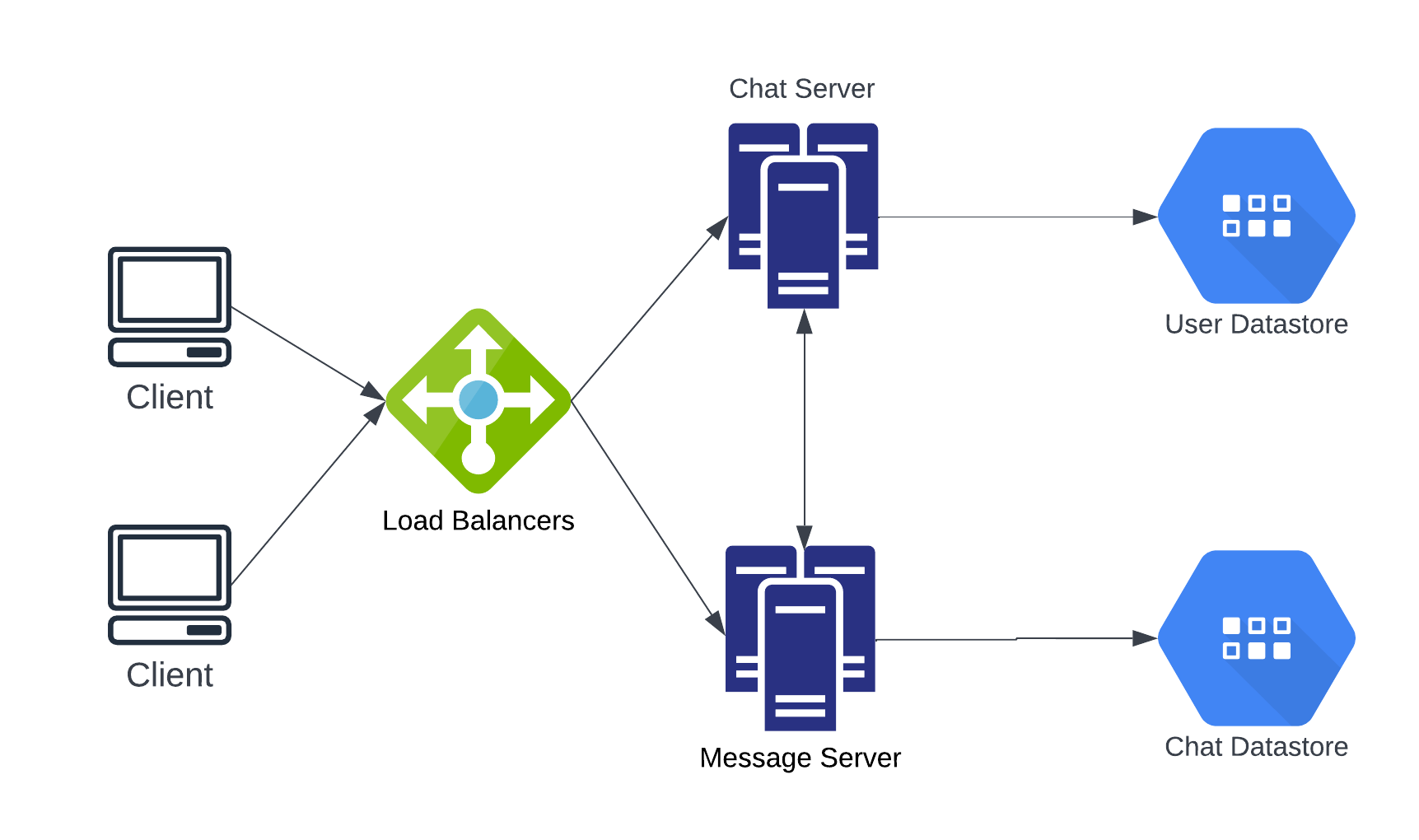

We can model the required functionality with two microservices: the chat service and the message service. The chat service is responsible for delivering messages to online users. It interacts directly with the user datastore to check whether the receiver is online and which server the receiver is connected to. If the receiver is offline, the message is sent to the message service, which stores it temporarily in the chat datastore until the receiver comes online.

We can calculate the number of servers needed for chat service by:

messages per second * latency / concurrent connections per server

Assume that each server can handle 100K concurrent connections, and message latency is around 50 ms. Then:

10M mps * 0.05 second / 100K connections = 5 servers needed

The same calculation applies to the message service.
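As a quick check, the formula can be written as a small helper. This sketch follows the text's formula as-is (messages in flight divided by per-server capacity); the function name and the choice to pass latency in milliseconds are illustrative.

```python
import math


def servers_needed(messages_per_second, latency_ms, connections_per_server):
    """Servers required to hold all in-flight messages at the given rate."""
    # Integer arithmetic in the numerator and denominator avoids
    # floating-point rounding before the final ceil().
    in_flight_times_1000 = messages_per_second * latency_ms
    return math.ceil(in_flight_times_1000 / (1000 * connections_per_server))


# Peak traffic of 10M messages/s, 50 ms latency, 100K connections per server.
print(servers_needed(10_000_000, 50, 100_000))
```

Rounding up matters for inputs that do not divide evenly: any fractional result still needs a whole extra server.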

API Design

POST /messages

Body:

receiver_id: id of a user or a group

sender_id: id of the user sending the request

content: text, image or video content (media content may be given as downloadable object url)

metadata: metadata for analytics such as device model, timestamp etc.

GET /messages

Query:

user_id: id of the user who is fetching messages
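To make the request shape concrete, here is a minimal sketch of the POST /messages body as a Python dataclass. The field names come from the API description above; the class name, the `validate` helper, and the example URL are hypothetical and only illustrate one possible shape.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SendMessageRequest:
    receiver_id: str   # id of a user or a group
    sender_id: str     # id of the user sending the request
    content: str       # text, or a downloadable URL for media content
    metadata: dict = field(default_factory=dict)  # e.g. device model, timestamp

    def to_json(self) -> str:
        return json.dumps(asdict(self))


def validate(request: SendMessageRequest) -> list:
    """Return a list of validation errors (empty if the request is valid)."""
    errors = []
    for name in ("receiver_id", "sender_id", "content"):
        if not getattr(request, name):
            errors.append(f"{name} is required")
    return errors


request = SendMessageRequest(
    receiver_id="userB",
    sender_id="userA",
    content="https://media.example.com/abc123",  # hypothetical media URL
    metadata={"device_model": "example-phone"},
)
print(validate(request))
print(request.to_json())
```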

Low Level Design

Datastore

Since we need partition tolerance and consistency, we can use Bigtable to store undelivered messages. We can also implement a FIFO-based stream from this datastore to deliver messages to users while preserving their order.

For the user datastore, we simply need to store the user ID, the last heartbeat time, and the ID of the connected chat server. We need the heartbeat time to track user activity and implement the last seen feature. So, we can use a distributed cache like Hazelcast or Redis.

How to Handle Messages

When userA establishes a connection with the server to send a message to userB, userB has two options for getting this message from the server:

- The client periodically requests new messages from the server (polling).

- The client establishes a keep-alive connection with the server, and the server pushes messages to the client when they arrive.

Since the second approach requires fewer resources and ensures lower latency, we will proceed with the server-push approach.

How to Handle Connections

With the server-push approach, there are two ways to establish a connection between client and server.

- WebSocket, a communication protocol that provides full-duplex communication channels over a single TCP connection. It is ideal for scenarios such as chat applications because communication flows in both directions.

- Server-sent events (SSE), which allow a server to send “new data” to the client asynchronously once the initial client-server connection has been established. SSE is more suitable for a publisher-subscriber model, such as real-time stock price streams, Twitter feed updates, and browser notifications.

Since chat requires communication in both directions, we will proceed with WebSockets for our chat application.

How to Send Messages

With the server-push method, the server maintains open connections with many active users at the same time. How will it pick which connection to send an incoming message to?

We will need a hash table that maps each client to the thread holding its open connection, i.e., user ID to thread ID. When a message arrives, the server looks up the thread ID for the intended user ID in this table and sends the message through that open connection.

If the receiver is offline, the chat server will not find an entry for that user in the hash table. In that case, the chat server sends the message to the message service, as discussed before. The message service stores the message in the chat datastore until the receiver comes online.
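The routing logic above can be sketched with a plain dictionary. This is a toy, single-process illustration under loud assumptions: a Python list stands in for an open connection, a second dictionary stands in for the message service and chat datastore, and the class and method names are hypothetical.

```python
class ChatServer:
    """Toy chat server: a dict maps each user ID to that user's open connection."""

    def __init__(self):
        # user_id -> connection. A plain list stands in for the open
        # connection: appending to it represents pushing to the client.
        self.connections = {}
        # receiver_id -> undelivered messages. Stands in for the message
        # service and the chat datastore.
        self.undelivered = {}

    def connect(self, user_id):
        connection = []
        self.connections[user_id] = connection
        # Deliver anything stored while the user was offline, oldest first.
        connection.extend(self.undelivered.pop(user_id, []))
        return connection

    def disconnect(self, user_id):
        self.connections.pop(user_id, None)

    def route(self, receiver_id, message):
        connection = self.connections.get(receiver_id)  # hash lookup, no scan
        if connection is None:
            # Receiver is offline: hand the message to the message service.
            self.undelivered.setdefault(receiver_id, []).append(message)
            return "stored"
        connection.append(message)
        return "pushed"


server = ChatServer()
print(server.route("userB", "hello"))           # userB is offline
inbox = server.connect("userB")                 # stored message is flushed
print(server.route("userB", "are you there?"))  # userB is now online
print(inbox)
```

Flushing stored messages in insertion order on connect keeps the FIFO ordering that the datastore section asks for.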

Message Delivery Status

Let’s denote two users and two chat servers as userA, userB, serverA, and serverB, and assume userA sends a message to userB.

Single Tick

Once the message from userA is delivered to serverA, serverA acknowledges to userA that the message has reached the server, and userA gets a single tick.

Double Tick

Once serverA forwards the message to serverB and serverB delivers it to userB over an open connection, userB acknowledges to serverB that the message was delivered. Then serverB acknowledges serverA, serverA acknowledges userA, and userA gets a double tick.

Blue Tick

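Once userB opens the application and reads the message, userB’s client sends a read acknowledgement to serverB, and the rest of the flow remains the same: serverB acknowledges serverA, and serverA acknowledges userA, who gets a blue tick.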
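The three ticks can be modeled as a small state machine on the sender's side. The sketch below is one possible way to track status, not a prescribed implementation; the names are hypothetical, and it adds the assumption that acknowledgements may arrive out of order, so the status only ever moves forward.

```python
from enum import IntEnum


class Status(IntEnum):
    PENDING = 0    # not yet acknowledged by serverA
    SENT = 1       # single tick: message reached serverA
    DELIVERED = 2  # double tick: message reached userB's device
    READ = 3       # blue tick: userB opened the message


class MessageStatusTracker:
    """Tracks the highest status acknowledged so far for each message."""

    def __init__(self):
        self._status = {}

    def register(self, message_id):
        self._status[message_id] = Status.PENDING

    def acknowledge(self, message_id, new_status):
        # Assumption: acks can be delayed or reordered, so never move backward.
        if new_status > self._status[message_id]:
            self._status[message_id] = new_status
        return self._status[message_id]


tracker = MessageStatusTracker()
tracker.register("msg-1")
print(tracker.acknowledge("msg-1", Status.SENT).name)
print(tracker.acknowledge("msg-1", Status.READ).name)
print(tracker.acknowledge("msg-1", Status.DELIVERED).name)  # late ack is ignored
```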

Last User Activity

We will have another microservice that periodically forwards heartbeats to the chat servers while a user is connected. Let’s say the client pings this service every 5 seconds. Once the chat server receives a heartbeat, it updates the user’s last heartbeat timestamp in the user datastore.
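A minimal sketch of the heartbeat bookkeeping follows, with an in-memory dictionary standing in for the distributed cache. The class name and the rule that a user counts as online if a heartbeat arrived within two intervals are assumptions made for illustration; timestamps are passed in explicitly to keep the example deterministic.

```python
HEARTBEAT_INTERVAL_SECONDS = 5
# Assumption: tolerate one missed heartbeat before treating a user as offline.
ONLINE_GRACE_SECONDS = 2 * HEARTBEAT_INTERVAL_SECONDS


class UserStatusStore:
    """In-memory stand-in for the user datastore (e.g. a distributed cache)."""

    def __init__(self):
        self._entries = {}  # user_id -> {"server_id", "last_heartbeat"}

    def record_heartbeat(self, user_id, server_id, now):
        self._entries[user_id] = {"server_id": server_id, "last_heartbeat": now}

    def last_seen(self, user_id):
        entry = self._entries.get(user_id)
        return None if entry is None else entry["last_heartbeat"]

    def is_online(self, user_id, now):
        last = self.last_seen(user_id)
        return last is not None and now - last <= ONLINE_GRACE_SECONDS


store = UserStatusStore()
store.record_heartbeat("userB", "serverB", now=1_000)
print(store.is_online("userB", now=1_004))  # heartbeat is recent
print(store.is_online("userB", now=1_030))  # heartbeats stopped
print(store.last_seen("userB"))             # shown as "last seen"
```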

Sending media content

Since WebSocket connections are meant to be lightweight, they are not suitable for sending large objects. Instead, we will have a media service, which is an HTTP server. The media service is responsible for uploading media content to permanent storage such as Amazon S3 and returning a unique downloadable URL. After that, the sender sends the URL as a text message, and the receiver obtains the media content from the URL.
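The upload-then-send-URL flow can be illustrated with an in-memory stand-in for the media service. Nothing here talks to a real object store: the dictionary replaces permanent storage, and the class name and the `media.example.com` base URL are hypothetical.

```python
import uuid


class MediaService:
    """Toy media service: stores blobs in memory and hands back a URL."""

    def __init__(self, base_url="https://media.example.com"):
        self._base_url = base_url
        self._objects = {}  # object_id -> bytes; stands in for permanent storage

    def upload(self, data: bytes) -> str:
        object_id = uuid.uuid4().hex  # unique key for the uploaded object
        self._objects[object_id] = data
        return f"{self._base_url}/{object_id}"

    def download(self, url: str) -> bytes:
        object_id = url.rsplit("/", 1)[-1]
        return self._objects[object_id]


media = MediaService()

# Sender side: upload over HTTP first, then send only the URL over the chat channel.
url = media.upload(b"fake image bytes")
message = {"sender_id": "userA", "receiver_id": "userB", "content": url}

# Receiver side: fetch the media using the URL carried in the message.
print(media.download(message["content"]))
```

Keeping the chat message itself small means the chat connection only ever carries short text payloads, regardless of media size.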

Group Chats

Let’s have another service called Group Service that keeps group IDs and member user IDs. So, there will be a one-to-many mapping from group ID to user IDs. When a member of a group sends a message, Group Service retrieves all member IDs, and the chat server then forwards the message to every server that those members are connected to.
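The fan-out step can be sketched as a function that groups recipients by the chat server they are connected to. The two dictionaries stand in for Group Service and the user datastore; the function name and the choice to return offline members separately (so they can go to the message service) are assumptions for illustration.

```python
from collections import defaultdict


def fan_out(group_id, sender_id, group_members, user_to_server):
    """Group a message's recipients by chat server; list offline members separately."""
    recipients_by_server = defaultdict(list)
    offline = []
    for member_id in group_members[group_id]:
        if member_id == sender_id:
            continue  # do not echo the message back to the sender
        server_id = user_to_server.get(member_id)
        if server_id is None:
            offline.append(member_id)  # would be handed to the message service
        else:
            recipients_by_server[server_id].append(member_id)
    return dict(recipients_by_server), offline


group_members = {"group1": ["userA", "userB", "userC", "userD"]}
user_to_server = {"userA": "serverA", "userB": "serverB", "userC": "serverB"}

online, offline = fan_out("group1", "userA", group_members, user_to_server)
print(online)
print(offline)
```

Grouping by server means each destination server receives the message once, along with the list of local recipients, rather than once per member.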

Bottlenecks

Chat Server Failure

In case a chat server fails, we should have idle chat servers on standby. Let’s say that for every 4 chat servers, we keep 1 idle server as a backup.

So, if a chat server fails, users that were connected to it will automatically connect to another available server, and their chat server ID in the user datastore will be updated. We can have a minimal service on the client device that periodically keeps track of available chat server IDs.
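A rough sketch of the failover bookkeeping is shown here. The text does not say how clients choose a new server, so picking the least-loaded healthy server is an assumption, as are the function names; the dictionary stands in for the user datastore.

```python
import math
from collections import Counter


def spare_servers(active_servers, active_per_spare=4):
    """Idle backups to keep, at 1 spare for every 4 active servers."""
    return math.ceil(active_servers / active_per_spare)


def reassign_users(failed_server, user_to_server, available_servers):
    """Return an updated user -> server mapping after one server fails."""
    healthy = [s for s in available_servers if s != failed_server]
    if not healthy:
        raise RuntimeError("no healthy chat server available")
    # Current number of users on each healthy server (missing keys count as 0).
    load = Counter(s for s in user_to_server.values() if s != failed_server)
    updated = dict(user_to_server)
    for user_id in sorted(user_to_server):
        if user_to_server[user_id] == failed_server:
            # Assumption: reconnect to the least-loaded healthy server.
            target = min(healthy, key=lambda s: load[s])
            updated[user_id] = target
            load[target] += 1
    return updated


user_to_server = {"u1": "s1", "u2": "s1", "u3": "s2"}
print(spare_servers(4))
print(reassign_users("s1", user_to_server, ["s1", "s2", "s3"]))
```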

Chat Datastore Failure

We can keep a replica of our chat datastore, so that every write to the chat datastore is also applied to the replica. When messages in the datastore are delivered to users and removed, they should be removed from the replica as well.